Esoteric Update #296 - Refactoring And Simulating Physical Media (Pictures Inside)

This update will be a long one, with some pictures lower down.

I hope everyone's having a good end of the year. I can't say I am, but that's normal now. This and my birthday are when I feel worst, I think. Anyway, I've been a bit busy with medical stuff these last weeks, but I still managed to get a lot done. Unfortunately, it's a bit of a digression from the adventure, but it'll pay off in the long run.

So, I guess I can divide this into two parts: I've been doing some major refactoring and significant work on image transformations. Let's start with the first.

I wanted to revisit three parts of the engine, one because I was getting errors and two more because maintaining them was starting to be a bit of a problem. Regarding the errors, there were problems with the engine's IO where valid operations would cause it to crash when attempting to create new files. I reviewed the whole IO and think it's fully stable now. The problem was specifically related to Windows not automatically creating the full directory path when creating a file. I thought I had it sorted out before, but there were specific functionalities added later on that did not correctly handle this.
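
For anyone curious about the general shape of this kind of fix, here is a minimal sketch in Python for brevity. It is not the engine's actual C# code. The idea is to create every missing parent directory before opening the file for writing. The function name and paths are hypothetical. In C#, `Path.GetDirectoryName` followed by `Directory.CreateDirectory` does the same job, because `CreateDirectory` creates every missing directory along the path and does nothing if they already exist.

```python
import os
import tempfile


def write_file_creating_dirs(path: str, data: bytes) -> None:
    """Write data to path, first creating any missing parent directories (hypothetical helper)."""
    parent = os.path.dirname(path)
    if parent:
        # exist_ok=True makes this a no-op when the directory tree already exists.
        os.makedirs(parent, exist_ok=True)
    with open(path, "wb") as f:
        f.write(data)


# Usage: target a nested folder that does not exist yet.
demo_root = tempfile.mkdtemp()
demo_path = os.path.join(demo_root, "saves", "generated", "map_0001.bin")
write_file_creating_dirs(demo_path, b"\x00\x01")
```

Putting the directory creation inside the one write helper means features added later cannot forget it. That is exactly the kind of gap described above.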

As for the refactoring, I split the whole draw command into separate sub-files. Although it is a single command, it uses its own syntax to define what you want it to draw. That was relatively easy.

The second part is the main parser for the engine. For the longest time, I've been using switch statements for API parsing because, the way C# is set up, these are transformed into binary search trees at compile time. However, this has two issues. The first is that lookup has O(log n) complexity. The second is that they are opaque and static: you can't look at what's there and change it, because after compilation they are just a single function. So, I pivoted to explicitly defined hash maps for token resolution. Not only does this bring lookup down to O(1) on average (which also has the practical effect of making token resolution faster), but it also leaves me with an examinable set of tokens. Defining them takes a bit more work, as nothing warns me about duplicates automatically, but overall, I think this is good for the engine's health. I've not yet finished the conversion. Interestingly, though, a partial conversion is fully operational, and that's what I have now (around 40% of the whole API).
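
A rough sketch of how a hash-map token table can coexist with an older switch-based parser during a partial conversion is shown below, again in Python rather than the engine's C#. `TokenTable`, `legacy_parser` and the `draw`/`say`/`wait` tokens are all hypothetical. The table rejects duplicates explicitly because a map will not do it for you. In C#, `Dictionary.Add` throws on a duplicate key, but assigning through the indexer silently overwrites the earlier entry.

```python
from typing import Callable, Dict, List

# A handler receives the arguments that follow its token and returns a result.
Handler = Callable[[List[str]], str]


class TokenTable:
    """Hypothetical hash-map token resolver with a fallback to a legacy parser."""

    def __init__(self, legacy: Handler) -> None:
        self._handlers: Dict[str, Handler] = {}
        # The old switch-based parser still handles tokens not converted yet.
        self._legacy = legacy

    def register(self, token: str, handler: Handler) -> None:
        # Plain dict assignment would silently overwrite, so reject duplicates here.
        if token in self._handlers:
            raise ValueError(f"duplicate token: {token!r}")
        self._handlers[token] = handler

    def tokens(self) -> List[str]:
        # Unlike a compiled switch, the table can be listed and inspected at runtime.
        return sorted(self._handlers)

    def dispatch(self, token: str, args: List[str]) -> str:
        handler = self._handlers.get(token)  # average-case O(1) hash lookup
        if handler is None:
            # Partial conversion: anything unregistered falls through to the old parser.
            return self._legacy([token] + args)
        return handler(args)


def legacy_parser(parts: List[str]) -> str:
    # Stand-in for the original switch statement; the tokens here are made up.
    if parts[0] == "wait":
        return f"legacy wait {parts[1]}"
    raise KeyError(f"unknown token: {parts[0]!r}")


table = TokenTable(legacy_parser)
table.register("draw", lambda args: "draw " + " ".join(args))
table.register("say", lambda args: "say " + " ".join(args))
print(table.dispatch("draw", ["circle"]))  # draw circle
print(table.dispatch("wait", ["3"]))       # legacy wait 3
```

The fallback in `dispatch` is what makes a partially converted API workable. Converted tokens resolve through the map, and everything else still reaches the old code path.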

Anyway, some of this was prompted by a concept I've been working on (functionally finished, but not yet active in-game) concerning procedurally generated content. The game relies on a free-form saving system: there are no clearly defined save slots, which would make everything so much easier. Because of that, the only easy way to store procedurally generated content would be to shove it all into the save file and keep it in memory whenever a save is loaded. While this is fine for smaller bits of content, I'd prefer not to be limited in this way. As such, I've implemented a handling strategy for procedurally generated elements that works with the saving scheme. I won't bore you with the details, but the game can now track which files each save needs and will remove a procedurally generated file only when exactly zero save files reference it. So it's basically garbage collection for the hard drive. What kind of procedural content, though? Well, anything, really: from code generated for specific situations or save files to images or even maps.
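
One way to picture "garbage collection for the hard drive" is reference counting between saves and generated files. The Python sketch below illustrates that idea under stated assumptions and is not the game's actual implementation. Saves and files are identified by hypothetical string names. The caller is assumed to delete whatever paths are returned, and in a real game the reference table itself would also have to be persisted or rebuilt by scanning the saves.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, Set


class ProceduralFileTracker:
    """Hypothetical reference tracker between save files and generated files."""

    def __init__(self) -> None:
        self._files_by_save: Dict[str, Set[str]] = {}
        self._saves_by_file: DefaultDict[str, Set[str]] = defaultdict(set)

    def _release(self, save_id: str) -> Set[str]:
        # Drop every reference held by this save; collect files nobody references any more.
        orphaned: Set[str] = set()
        for f in self._files_by_save.pop(save_id, set()):
            refs = self._saves_by_file[f]
            refs.discard(save_id)
            if not refs:
                del self._saves_by_file[f]
                orphaned.add(f)
        return orphaned

    def record_save(self, save_id: str, files: Iterable[str]) -> Set[str]:
        """Register (or overwrite) a save; return generated files that became unreferenced."""
        new_files = set(files)
        orphaned = self._release(save_id)
        self._files_by_save[save_id] = new_files
        for f in new_files:
            self._saves_by_file[f].add(save_id)
        # Anything the new version of the save still uses is not garbage after all.
        return orphaned - new_files

    def delete_save(self, save_id: str) -> Set[str]:
        """Forget a save; return generated files that are now safe to delete from disk."""
        return self._release(save_id)


tracker = ProceduralFileTracker()
tracker.record_save("autosave", ["map_0001.bin", "portrait_17.png"])
tracker.record_save("manual_03", ["map_0001.bin"])
to_delete = tracker.delete_save("autosave")  # {"portrait_17.png"}
```

Keeping the mapping in both directions means a file is reported as deletable only when its last referencing save disappears or stops using it, which is the "exactly zero references" rule above.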

Right, let's move on to the images then. The theme today is simulating printed (and drawn) media. The topic isn't quite closed yet, but I need a break from it before I get back to it. Overall, this required a lot of different pieces, but I'll just show some output examples and explain what they are for, without the under-the-hood or intermediate steps.

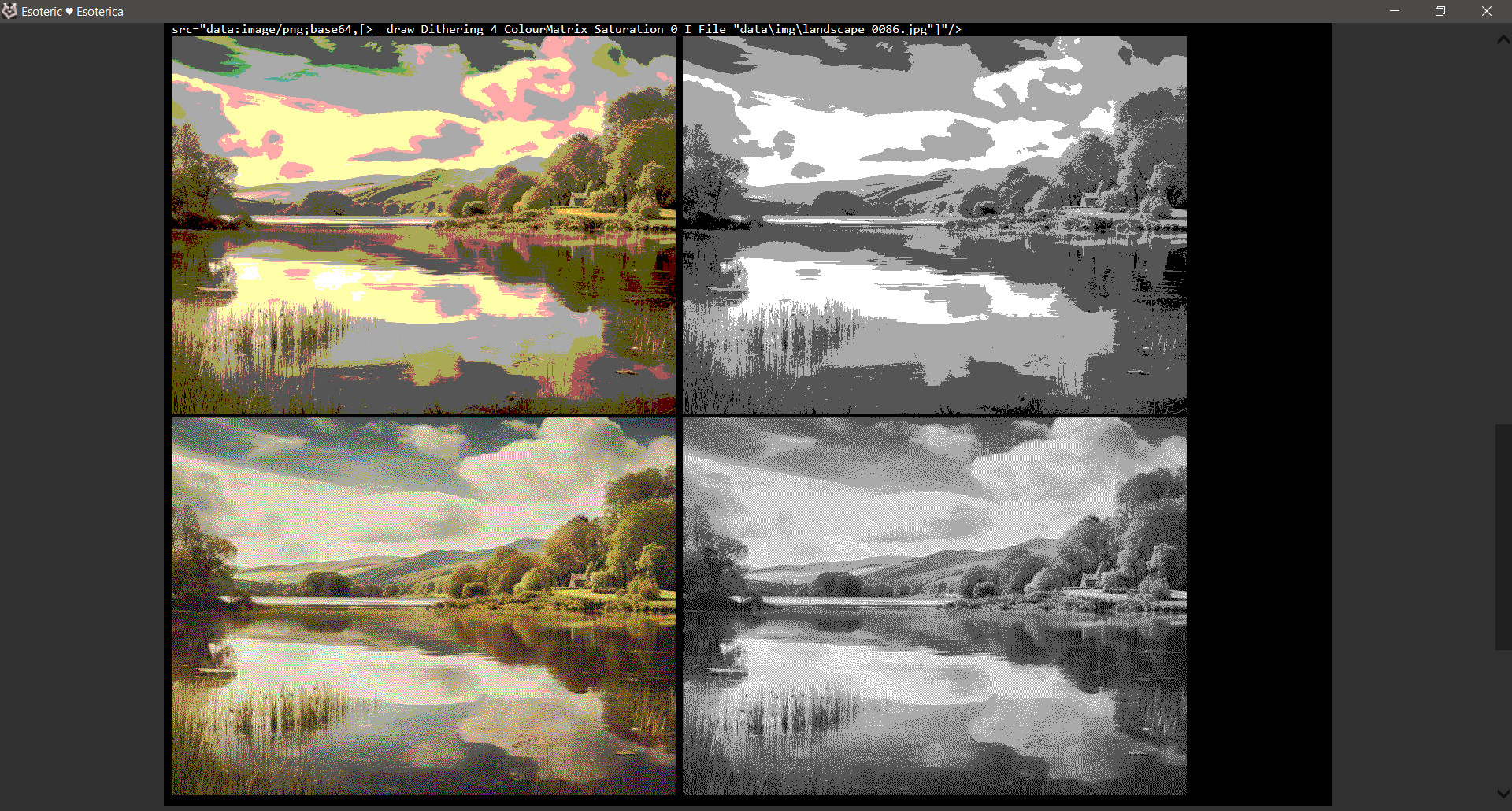

First off, we have colour depth reduction. These images reduce colours to 4 levels per channel, giving us 64 colours (4 × 4 × 4) for the RGB images and just 4 shades for the B&W images. The upper pictures use raw colour quantization, while the lower images combine that with dithering to improve the appearance. These techniques are... not very useful on their own, but they are the basis for the more refined approaches coming up in a moment.
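
For readers who want the basic idea in code, here is a minimal Python sketch of uniform quantization to 4 levels (0, 85, 170, 255), with and without error diffusion, on a greyscale image stored as nested lists. The post doesn't say which dithering method is used. Floyd-Steinberg, shown here, is simply a common choice, and ordered (Bayer) dithering would be another. For RGB, the same thing is applied to each channel separately, which is where the 64 colours come from.

```python
from typing import List

Image = List[List[float]]  # greyscale pixels in the range 0-255


def quantize_value(v: float, levels: int) -> float:
    """Snap v to the nearest of `levels` evenly spaced values between 0 and 255."""
    step = 255 / (levels - 1)
    return round(min(max(v, 0.0), 255.0) / step) * step


def quantize(img: Image, levels: int) -> Image:
    # Raw quantization: every pixel is rounded independently.
    return [[quantize_value(v, levels) for v in row] for row in img]


def floyd_steinberg(img: Image, levels: int) -> Image:
    """Quantize with Floyd-Steinberg error diffusion."""
    h, w = len(img), len(img[0])
    buf = [row[:] for row in img]  # working copy that accumulates diffused error
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            old = buf[y][x]
            new = quantize_value(old, levels)
            out[y][x] = new
            err = old - new
            # Spread the rounding error to not-yet-visited neighbours (7/16, 3/16, 5/16, 1/16).
            if x + 1 < w:
                buf[y][x + 1] += err * 7 / 16
            if y + 1 < h:
                if x > 0:
                    buf[y + 1][x - 1] += err * 3 / 16
                buf[y + 1][x] += err * 5 / 16
                if x + 1 < w:
                    buf[y + 1][x + 1] += err * 1 / 16
    return out


flat = [[100.0] * 8 for _ in range(8)]
plain = quantize(flat, 4)            # every pixel becomes 85.0
dithered = floyd_steinberg(flat, 4)  # a pattern mixing neighbouring levels
```

On a flat grey of 100, raw quantization collapses everything to 85. The dithered version scatters some 170s among the 85s, so the average tone comes out closer to the original.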

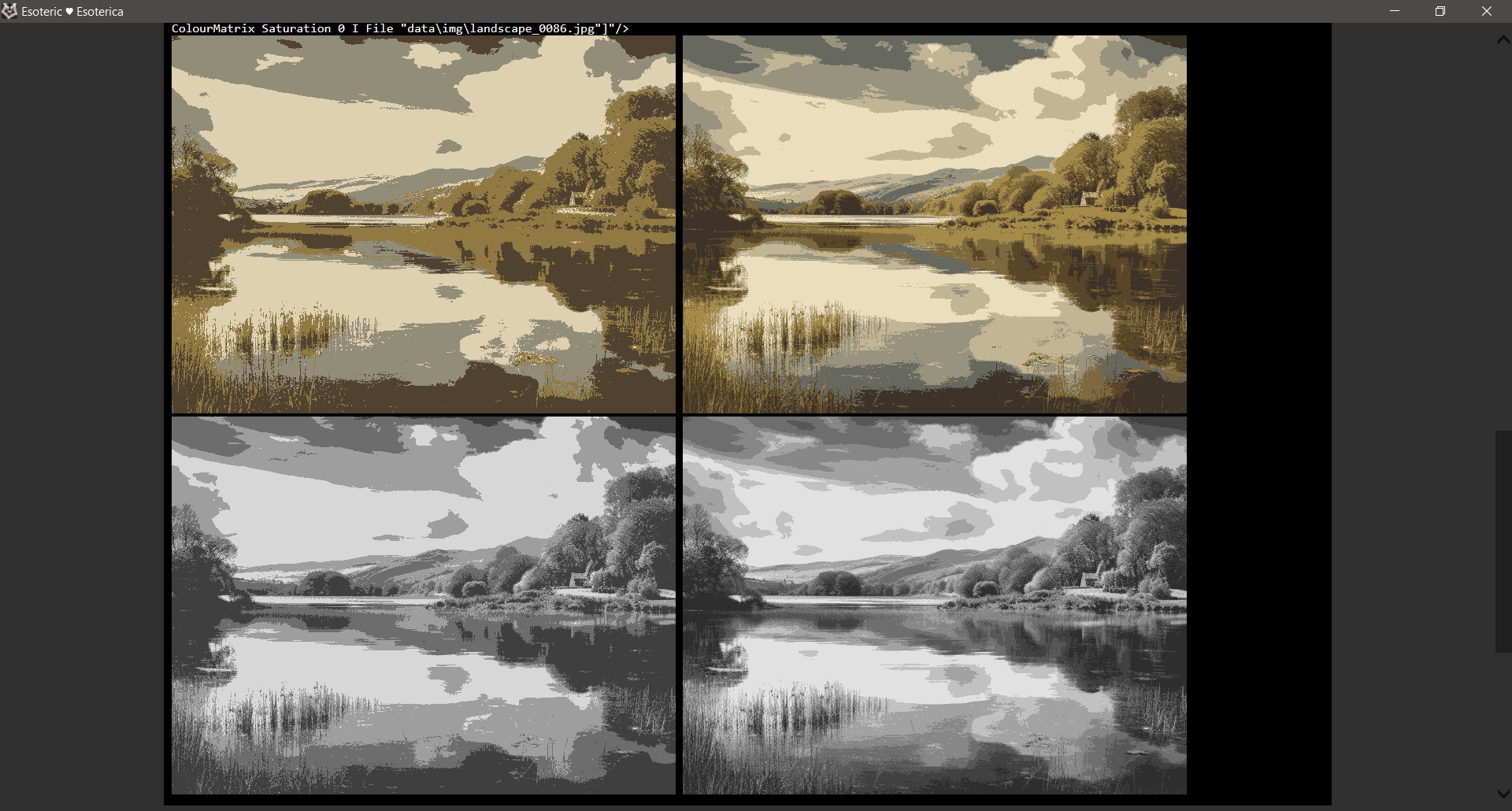

Then, we have a more bespoke form of quantization that selects several representative colours for the image and uses only those. These use 4 or 8 colours, whether the image is RGB or B&W.

Like the set above, these also use dithering to spread and mix the colours. This technique helps represent printing where only a limited number of colours can be used, particularly if some blur is applied to simulate colour bleeding. Here, no blur was used, so you can take a closer look at the results (the images are pixel-accurate).
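
The post doesn't say how the representative colours are chosen. As one plausible approach, here is a Python sketch that picks the palette with plain k-means (Lloyd's algorithm) and then applies the same Floyd-Steinberg diffusion as before, snapping to the nearest palette colour instead of a fixed level. Median cut is the other classic option. The function names and the deterministic brightness-based initialisation are assumptions made for illustration.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]


def dist2(a: Color, b: Color) -> float:
    # Squared Euclidean distance in RGB space.
    return sum((p - q) ** 2 for p, q in zip(a, b))


def nearest(color: Color, palette: List[Color]) -> Color:
    return min(palette, key=lambda p: dist2(color, p))


def kmeans_palette(pixels: List[Color], k: int, iterations: int = 10) -> List[Color]:
    """Choose k representative colours with plain k-means (Lloyd's algorithm)."""
    # Deterministic start: k pixels spread evenly through the list sorted by brightness.
    ordered = sorted(pixels, key=sum)
    palette = [ordered[i * (len(ordered) - 1) // max(k - 1, 1)] for i in range(k)]
    for _ in range(iterations):
        buckets: List[List[Color]] = [[] for _ in range(k)]
        for px in pixels:
            buckets[min(range(k), key=lambda i: dist2(px, palette[i]))].append(px)
        # Move each centre to the mean of its bucket; an empty bucket keeps its old centre.
        palette = [
            tuple(sum(c[ch] for c in b) / len(b) for ch in range(3)) if b else palette[i]
            for i, b in enumerate(buckets)
        ]
    return palette


def dither_to_palette(img: List[List[Color]], palette: List[Color]) -> List[List[Color]]:
    """Floyd-Steinberg error diffusion, mapping each pixel to its nearest palette colour."""
    h, w = len(img), len(img[0])
    buf = [[list(px) for px in row] for row in img]
    out: List[List[Color]] = [[palette[0]] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            old = tuple(buf[y][x])
            new = nearest(old, palette)
            out[y][x] = new
            err = [o - n for o, n in zip(old, new)]
            for dx, dy, weight in ((1, 0, 7), (-1, 1, 3), (0, 1, 5), (1, 1, 1)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < w and ny < h:
                    for ch in range(3):
                        buf[ny][nx][ch] += err[ch] * weight / 16
    return out


pixels = [(10, 20, 30), (12, 18, 32), (240, 230, 220), (238, 232, 222)]
palette = kmeans_palette(pixels, k=2)  # [(11.0, 19.0, 31.0), (239.0, 231.0, 221.0)]
```

Because the palette adapts to each image, 4 or 8 well-chosen colours can go much further than a fixed 64-colour grid. The dithering then blends them, much like a limited set of printing inks would.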

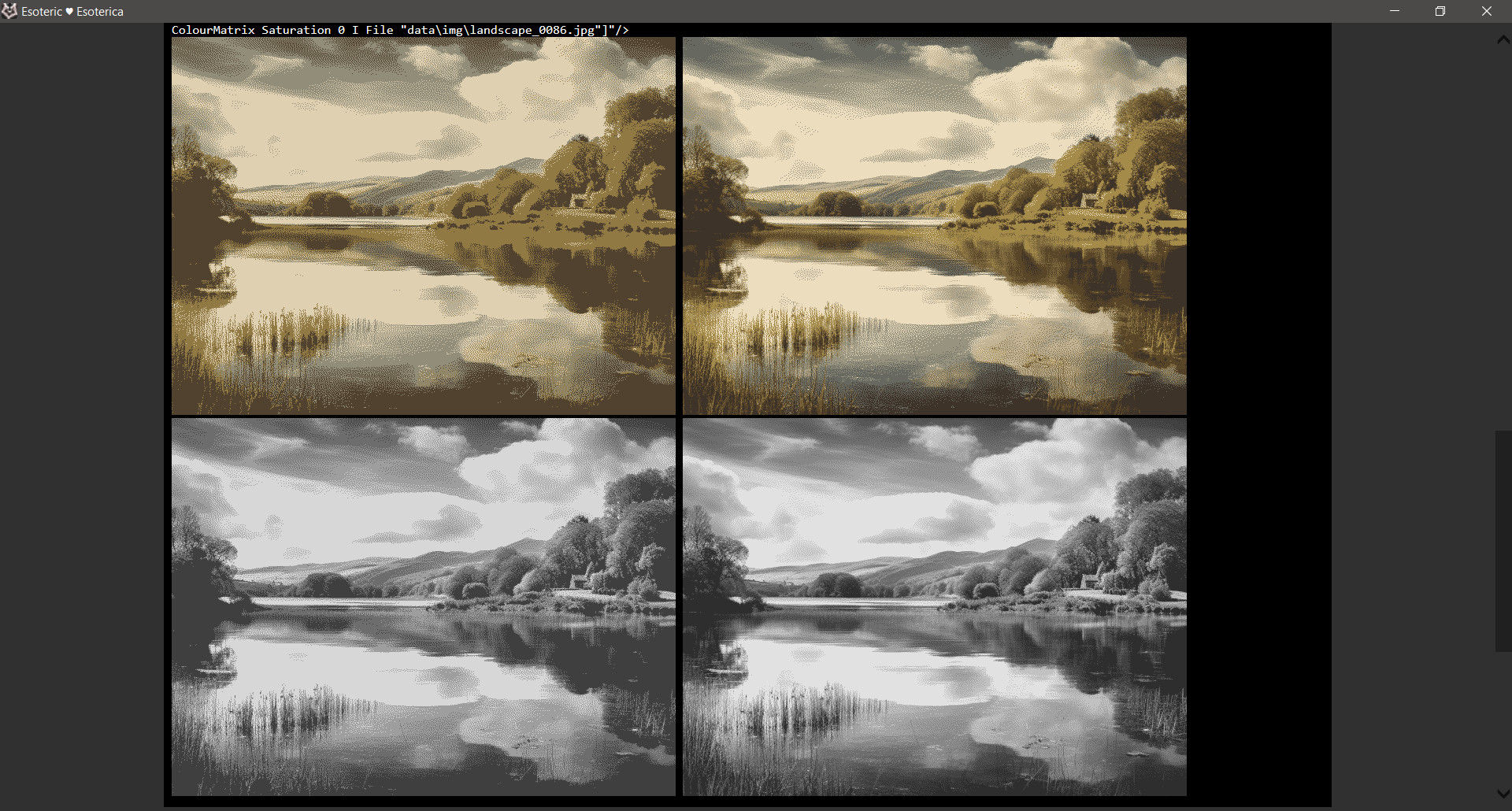

Now for a little side tangent. These images use a crosshatching transformation: the upper ones use strokes, and the lower ones use stippling (really, what is a dot if not a short and chunky line?). With strokes, the transformation can represent sketches made by characters, while the stippling is great for B&W printed media, essentially being a superior form of B&W dithering. Unfortunately, due to how this algorithm works, it does not apply to coloured images. Or rather, it does, but the results are not good and don't look like anything in particular. Perhaps I'll find a solution to this later.
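
The author's crosshatching algorithm isn't described, so the Python sketch below only illustrates the two textures in their simplest textbook forms. Tonal hatching adds another family of parallel lines each time the tone crosses a darkness threshold. Random stippling places a dot wherever a random draw falls below the pixel's darkness. The thresholds, the spacing and the function names are all assumptions. More polished stipplers usually relax dot positions (for example, with weighted Voronoi stippling) so the spacing looks even, and each returned position would then be drawn as a small disc.

```python
import random
from typing import List, Tuple

Gray = List[List[float]]  # 0 = black, 255 = white


def darkness(v: float) -> float:
    # 0.0 for white paper, 1.0 for solid black.
    return 1.0 - min(max(v, 0.0), 255.0) / 255.0


def stipple(img: Gray, density: float = 1.0, seed: int = 0) -> List[Tuple[int, int]]:
    """Random stippling: each pixel gets a dot with probability equal to its darkness."""
    rng = random.Random(seed)  # seeded so an image always stipples the same way
    return [
        (x, y)
        for y, row in enumerate(img)
        for x, v in enumerate(row)
        if rng.random() < darkness(v) * density
    ]


def hatch(img: Gray, spacing: int = 4) -> Gray:
    """Tonal crosshatching: darker areas accumulate more families of parallel lines."""
    out = [[255.0] * len(row) for row in img]
    for y, row in enumerate(img):
        for x, v in enumerate(row):
            d = darkness(v)
            on_stroke = (
                (d > 0.25 and (x + y) % spacing == 0)       # first diagonal layer
                or (d > 0.5 and (x - y) % spacing == 0)     # opposite diagonal
                or (d > 0.75 and y % spacing == 0)          # horizontal layer for deep shadow
            )
            if on_stroke:
                out[y][x] = 0.0
    return out
```

Both functions work purely from a single darkness value per pixel. That is one plausible reason techniques like these don't carry over naturally to colour images.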

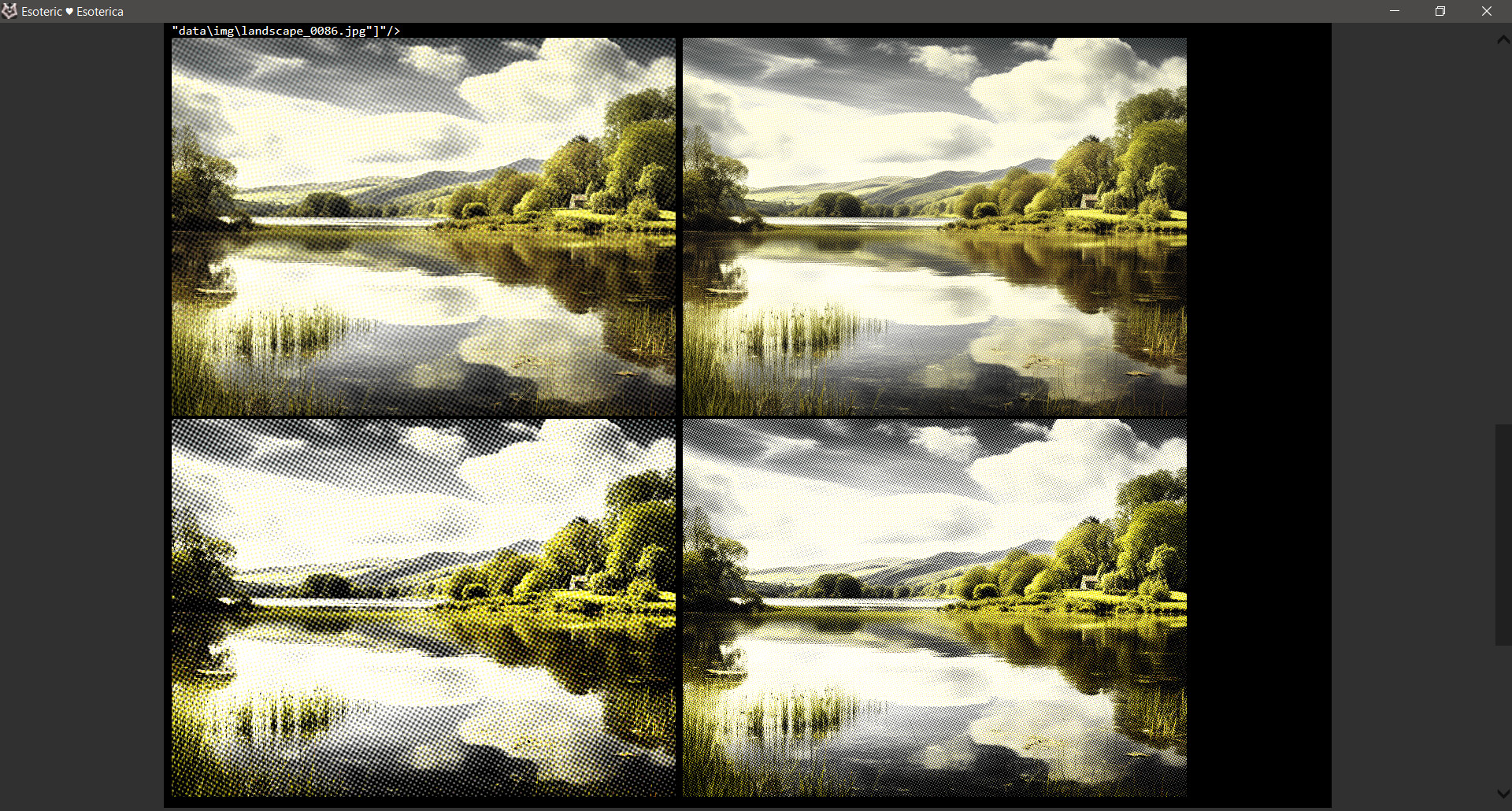

Capping off printed media representation for the more colourful images, this is a halftone transformation simulating the effects of screen printing in the CMYK colour space. The left pictures are slightly exaggerated, while the bottom ones present more colour bleeding. This transformation has interesting properties, including simulating the so-called "rosettes" that naturally emerge in screen printing.
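
Here is a simplified Python sketch of how a CMYK halftone can be simulated. It is not the author's implementation. Each pixel is converted to CMYK with a naive formula that ignores colour management. Each ink then gets its own grid of round dots whose area tracks that ink's coverage, and each grid is rotated by a different screen angle. Rosettes appear where these rotated grids overlap. The 15/75/0/45 degree angles are one common convention, and the cell size and function names are illustrative assumptions.

```python
import math
from typing import Tuple

# One common set of screen angles in degrees (conventions vary between printers).
SCREEN_ANGLES = {"C": 15.0, "M": 75.0, "Y": 0.0, "K": 45.0}


def rgb_to_cmyk(r: float, g: float, b: float) -> Tuple[float, float, float, float]:
    """Naive RGB (0-255) to CMYK (0-1) conversion, with no colour management."""
    rp, gp, bp = r / 255.0, g / 255.0, b / 255.0
    k = 1.0 - max(rp, gp, bp)
    if k >= 1.0:
        return 0.0, 0.0, 0.0, 1.0  # pure black: key ink only
    c = (1.0 - rp - k) / (1.0 - k)
    m = (1.0 - gp - k) / (1.0 - k)
    y = (1.0 - bp - k) / (1.0 - k)
    return c, m, y, k


def inked(x: float, y: float, coverage: float, angle_deg: float, cell: float = 6.0) -> bool:
    """Does point (x, y) fall inside a round halftone dot on a screen rotated by angle_deg?"""
    a = math.radians(angle_deg)
    # Rotate the point into the screen's own grid.
    u = x * math.cos(a) + y * math.sin(a)
    v = -x * math.sin(a) + y * math.cos(a)
    # Offset from the centre of the grid cell containing (u, v).
    du = u % cell - cell / 2
    dv = v % cell - cell / 2
    # Dot area is coverage * cell^2 until neighbouring dots touch (about 78.5% coverage).
    radius = cell * math.sqrt(coverage / math.pi)
    return du * du + dv * dv < radius * radius


def halftone_pixel(x: int, y: int, rgb: Tuple[float, float, float]) -> Tuple[int, int, int]:
    """Composite the four screens at one position using subtractive mixing."""
    amounts = dict(zip("CMYK", rgb_to_cmyk(*rgb)))
    ink = {ch: inked(x, y, amounts[ch], SCREEN_ANGLES[ch]) for ch in "CMYK"}
    if ink["K"]:
        return (0, 0, 0)
    # Cyan absorbs red, magenta absorbs green, yellow absorbs blue.
    return (0 if ink["C"] else 255, 0 if ink["M"] else 255, 0 if ink["Y"] else 255)
```

Colour bleeding like the bottom images show could then be approximated by blurring the composited result slightly, though that step is not included here.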

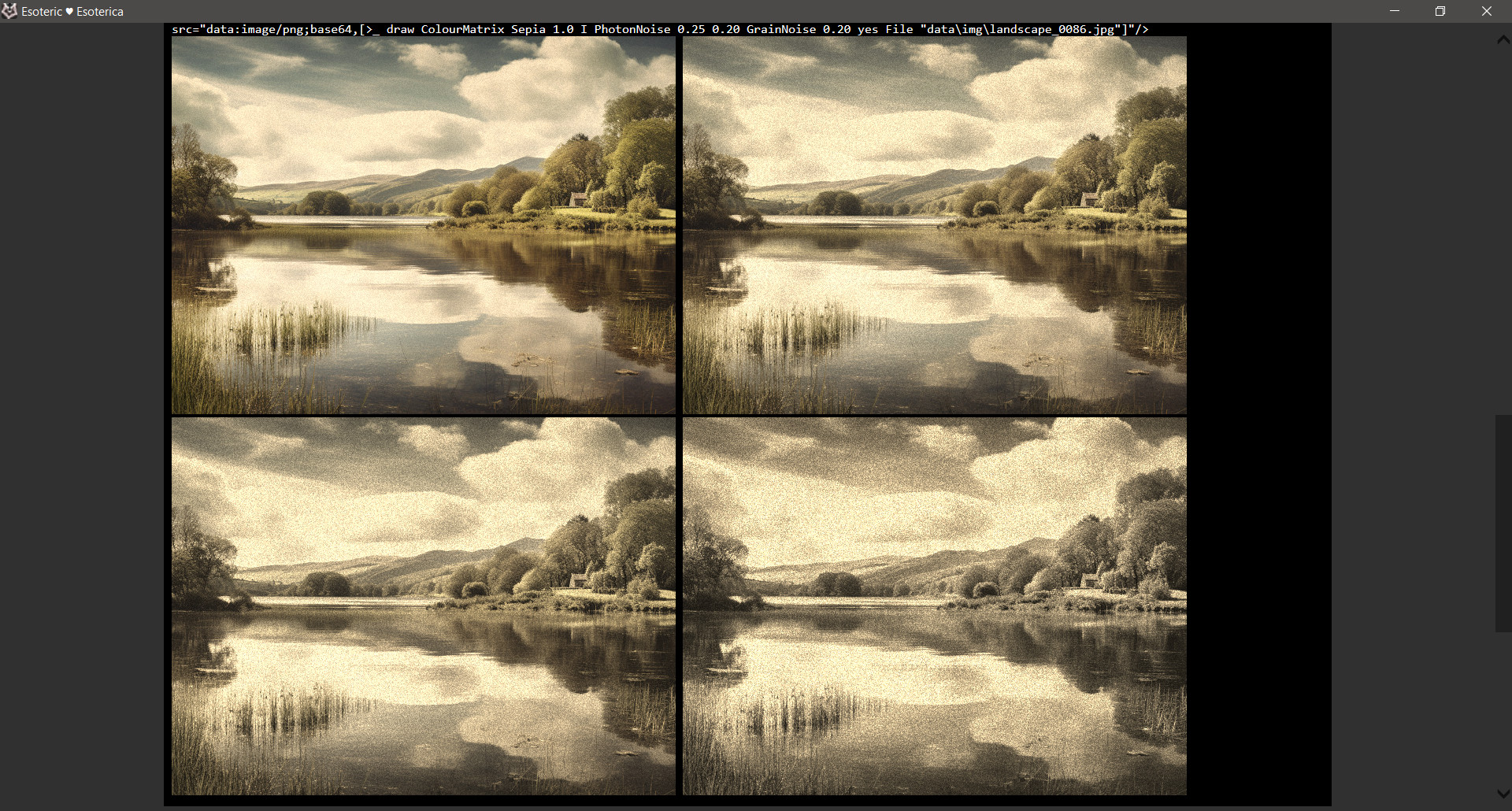

Finally, we can touch on some vintage photography. This transformation combines a sepia colour filter with simulations of noise found in photography to represent a progressively more decayed photo (the final image is somewhat exaggerated for this presentation!)

Adding vignetting to the image (as in the photography distortion rather than the purposeful application of the vignette effect) finishes the aged photo look.
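
A minimal Python sketch of the aged-photo pipeline described above follows: sepia toning, grain, then vignetting. The sepia matrix is a widely used set of coefficients. The grain model (one Gaussian offset per pixel, shared across channels) and the quadratic vignette falloff are simplifying assumptions, since real film grain and lens falloff are more involved. Raising `noise` over successive renders would give the "progressively more decayed" effect.

```python
import math
import random
from typing import List, Tuple

Pixel = Tuple[float, float, float]


def sepia(p: Pixel) -> Pixel:
    r, g, b = p
    # A widely used sepia tone matrix; channels are clipped at 255.
    return (
        min(255.0, 0.393 * r + 0.769 * g + 0.189 * b),
        min(255.0, 0.349 * r + 0.686 * g + 0.168 * b),
        min(255.0, 0.272 * r + 0.534 * g + 0.131 * b),
    )


def age_photo(img: List[List[Pixel]], noise: float = 12.0,
              vignette: float = 0.6, seed: int = 0) -> List[List[Pixel]]:
    """Sepia tone, then per-pixel grain, then radial darkening towards the corners."""
    rng = random.Random(seed)
    h, w = len(img), len(img[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    max_d = math.hypot(cx, cy) or 1.0  # avoids dividing by zero for a 1x1 image
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            r, g, b = sepia(img[y][x])
            # Grain: one Gaussian offset shared by all three channels.
            n = rng.gauss(0.0, noise)
            # Vignetting: brightness falls off with squared distance from the centre.
            d = math.hypot(x - cx, y - cy) / max_d
            falloff = 1.0 - vignette * d * d
            row.append(tuple(min(255.0, max(0.0, (c + n) * falloff)) for c in (r, g, b)))
        out.append(row)
    return out
```

With `vignette=0.6`, the corners keep 40% of their brightness while the centre is untouched. That is a fairly strong setting, comparable to the deliberately exaggerated final image.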

I still have some other things in the works, but I'll let them rest for a while. There's no need to focus on this now, as I have what I need for the moment. As a practical application, let's compare these two documents. First, how the document looked before...

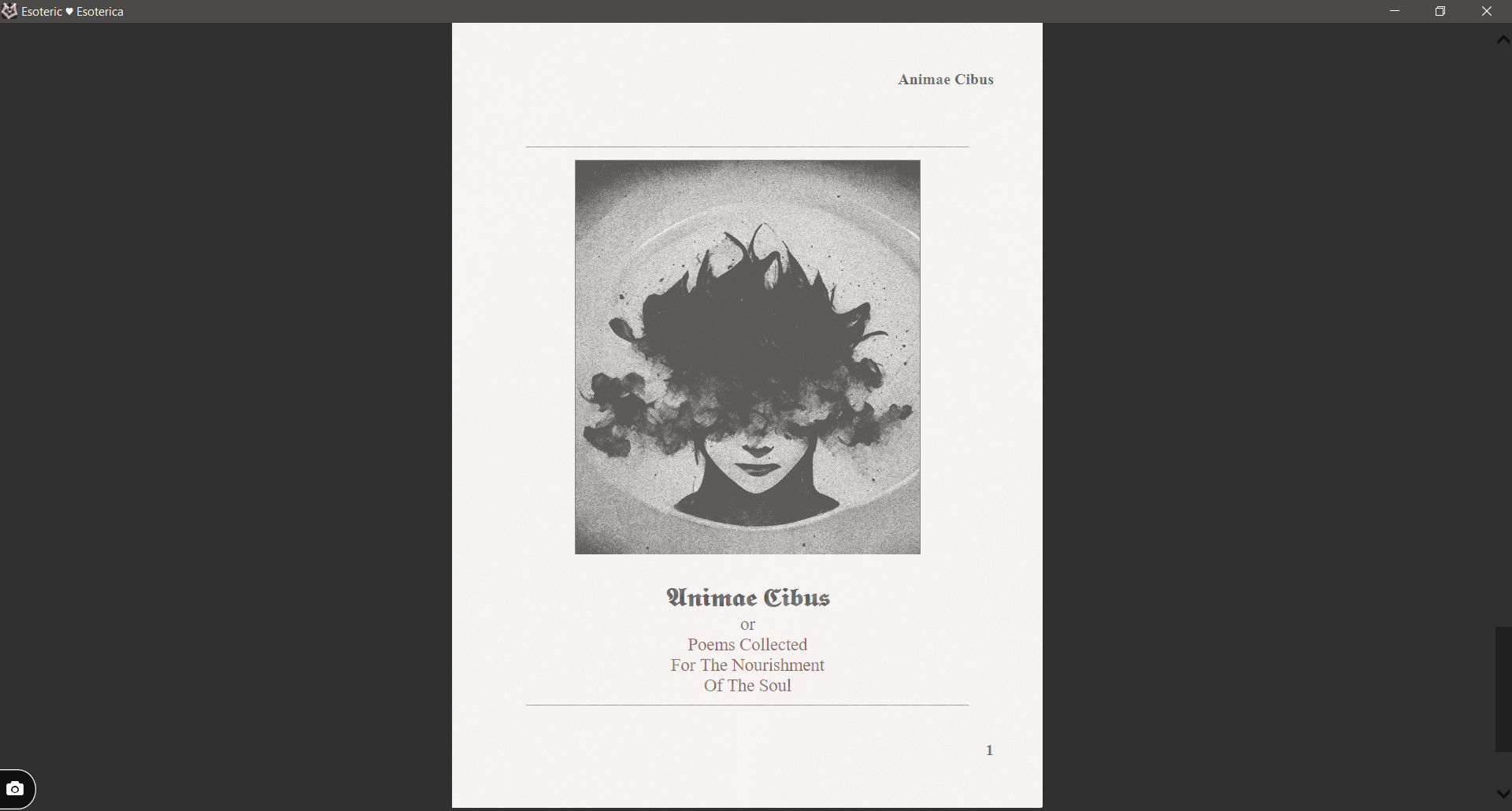

And after the application of some stippling.

Anyway, I am likely going to the hospital on the 8th; if that happens, the next update will be in two weeks. However, if things change and I don't need to do that after all, I'll make a routine update on the 7th. I'm sorry it has to be like this. My doctor is pretty insistent that I stay at the hospital for a while.

Esoteric ♥ Esoterica

A story-driven erotic game about magic, supernatural forces, love and BDSM.

| Field | Value |
|---|---|
| Status | In development |
| Author | EsoDev |
| Genre | Interactive Fiction |
| Tags | Erotic, Experimental, Fantasy, Female Protagonist, Mystery, Procedural Generation, Romance, Story Rich, Text based |
| Languages | English |
| Accessibility | Color-blind friendly, High-contrast |
